I’ve sat with business owners in Bournemouth cafés, SME teams in Poole boardrooms, and corporate managers in London offices, and this question always comes up:

“Our site is fast, but Google still isn’t indexing our content. Why?”

It’s a frustration I hear a lot. You’ve invested in web design, streamlined your code, compressed your images, and earned those glowing PageSpeed scores. Your content is fresh, unique, and genuinely helpful: the kind of content that deserves a place in the results. Yet the search rankings don’t reflect the effort, and blog posts sit in limbo, marked “Discovered – currently not indexed” in Search Console.

That’s when I explain the distinction between site speed (the customer experience) and crawl speed (Googlebot’s experience). They overlap, but they’re not the same, and confusing the two can quietly hold back your visibility.

Site speed: what visitors feel in the browser

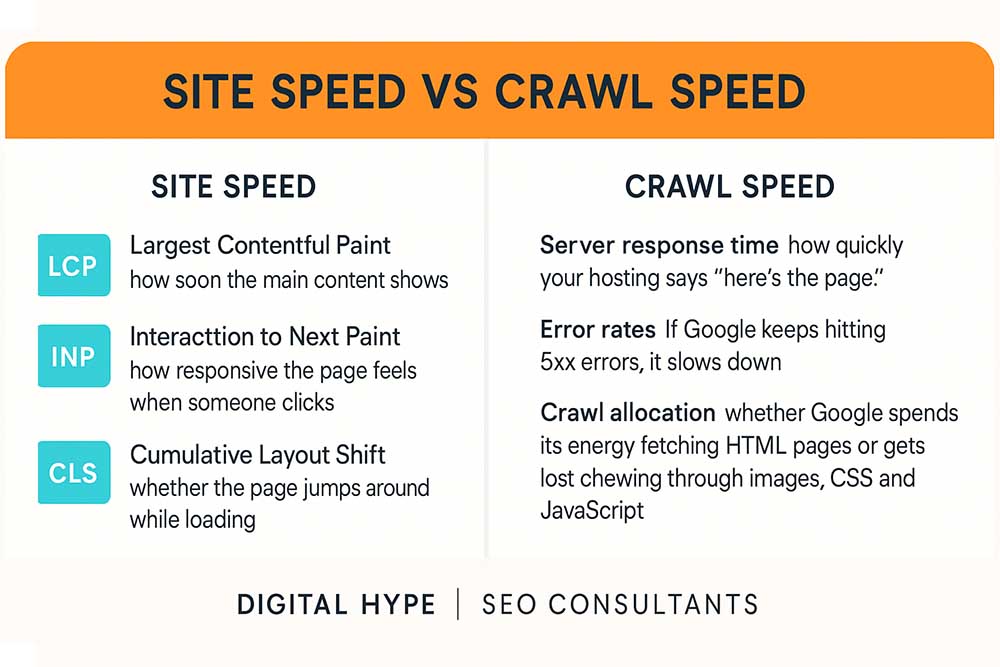

When people land on your page, they expect it to appear quickly, scroll smoothly, and respond instantly. If it doesn’t, they’re gone. That’s why Google measures Core Web Vitals like:

- LCP (Largest Contentful Paint) – how soon the main content shows.

- INP (Interaction to Next Paint) – how quickly the page responds visually when someone clicks, taps or types.

- CLS (Cumulative Layout Shift) – whether the page jumps around while loading.
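To make "green results" concrete, here is a minimal Python sketch that rates the three metrics against Google's published thresholds (LCP 2.5 s and 4.0 s, INP 200 ms and 500 ms, CLS 0.1 and 0.25). The function name and the sample page values are my own illustrations, not part of any Google tool.

```python
# Published Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "lcp": (2.5, 4.0),   # seconds
    "inp": (200, 500),   # milliseconds
    "cls": (0.1, 0.25),  # unitless layout-shift score
}


def rate_metric(name: str, value: float) -> str:
    """Return 'good', 'needs improvement' or 'poor' for one metric value."""
    good, needs_improvement = THRESHOLDS[name.lower()]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical 75th-percentile field values for one page.
page = {"lcp": 2.1, "inp": 260, "cls": 0.05}
for metric, value in page.items():
    print(f"{metric.upper()}: {value} -> {rate_metric(metric, value)}")
```

Google assesses these at the 75th percentile of real visits, so a single lab run can look better or worse than the field data.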

You can check all this on PageSpeed Insights or GTmetrix. If you’ve worked with a decent web design setup and proper caching, you’ll often see green results, indicating that your users are satisfied.

Crawl speed: what Googlebot sees behind the curtain

Googlebot isn’t a customer. It doesn’t admire your brand colours or hover over your calls to action. It pounds your server with hundreds of requests and times the replies.

Crawl speed is all about:

- Server response time – how quickly your hosting says “here’s the page.”

- Error rates – if Google keeps hitting 5xx errors, it slows down.

- Crawl allocation – whether Google spends its energy fetching HTML pages or gets lost chewing through images, CSS and JavaScript.

- Discovery vs refresh – is it spending crawl budget on your new content or just re-checking old pages?

You’ll find this data in Search Console → Settings → Crawl stats. For me, that’s always the eye-opener.

When the two don’t match

I’ve seen it with plenty of Dorset businesses: a site feels fast to humans but looks sluggish to Google. The usual suspects?

- WordPress cache plugins not serving bots, so crawlers always hit the slow, uncached version.

- Security software being too aggressive and throttling Googlebot.

- Shared hosting coping fine with visitors but falling over when a crawler requests dozens of pages in seconds.

- Redirect chains and 404s that waste crawl budget.

- Crawl waste on plugin files and image variants instead of your HTML content.

The end result: people see a quick site, Google sees delays, and your new posts get stuck in limbo.

Where to look first

If you’re in this situation, there are a few places I’d always check:

- Search Console Crawl stats – response time, file type breakdown, error rates.

- URL Inspection – test a new page live and see if Google confirms it’s crawlable.

- Hosting logs – look for spikes in CPU, RAM or PHP worker usage when bots visit.

- Robots.txt – is Google wasting energy on crawling non-essential files?

- Internal links – are your new pages linked from strong pages or buried away?
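If your host gives you raw access logs, a rough version of the Crawl stats breakdown can be reproduced locally. The sketch below assumes the common "combined" log format and identifies Googlebot by user agent only, which can be spoofed, so treat the numbers as indicative. The sample log lines and the function name are hypothetical.

```python
import re
from collections import Counter
from pathlib import PurePosixPath

# Matches the request, status and user-agent parts of a combined-format log line.
LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)
TYPES = {".css": "css", ".js": "javascript", ".png": "image", ".jpg": "image",
         ".jpeg": "image", ".webp": "image", ".gif": "image", ".svg": "image"}


def summarise_googlebot(lines):
    """Count Googlebot requests by file type and tally 5xx responses."""
    by_type, errors, total = Counter(), 0, 0
    for line in lines:
        m = LINE.search(line)
        if not m or "Googlebot" not in m["ua"]:
            continue
        total += 1
        # Drop any query string, then classify by file extension.
        suffix = PurePosixPath(m["path"].split("?", 1)[0]).suffix.lower()
        by_type[TYPES.get(suffix, "html")] += 1
        if m["status"].startswith("5"):
            errors += 1
    return {"total": total, "by_type": dict(by_type), "errors_5xx": errors}


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical log lines for illustration only.
SAMPLE = [
    f'66.249.66.1 - - [10/Oct/2025:13:55:36 +0000] "GET /blog/new-post/ HTTP/1.1" 200 5123 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2025:13:55:37 +0000] "GET /wp-content/uploads/hero-300x200.jpg HTTP/1.1" 200 20480 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2025:13:55:38 +0000] "GET /services/ HTTP/1.1" 503 0 "-" "{GOOGLEBOT}"',
    '203.0.113.9 - - [10/Oct/2025:13:55:39 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
print(summarise_googlebot(SAMPLE))
```

A high share of image or script requests next to a handful of HTML fetches is the crawl-allocation problem described earlier, and any 5xx count above zero is worth chasing with your host.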

Actions that usually make the most significant difference

- Serve cached pages to crawlers – don’t just optimise for human visitors. Make sure Googlebot is seeing the same fast, cached experience.

- Tidy up robots.txt – let search engines reach the content and assets they need, but stop them from burning crawl budget on files or directories that don’t add value.

- Eliminate broken links and redirect chains – every wasted hop slows down crawling. Point links directly to their final destination and fix pages that return errors.

- Strengthen your hosting setup if needed – underpowered servers or tight resource limits can bottleneck crawl response. Even a modest upgrade in capacity or concurrency can have a significant impact.

- Build links from your strongest pages – whether it’s cornerstone articles, high-authority service pages, or trusted content marketing pieces, make sure new content is connected internally to the areas of your site Google already values.

- Highlight fresh content in visible hubs – feature new articles on your homepage, in category hubs, or in pillar pages so crawlers find them early and prioritise them.
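For the redirect clean-up, it helps to know where each internal link finally lands and how many hops it takes. Here is a small sketch that works from an exported redirect map rather than live requests. The map, the link list and the function name are hypothetical examples.

```python
def resolve_redirects(redirects: dict[str, str], start: str, max_hops: int = 10):
    """Follow a redirect map from `start`; return (final_url, hops)."""
    seen, url, hops = {start}, start, 0
    while url in redirects and hops < max_hops:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        seen.add(url)
    return url, hops


# Hypothetical redirect rules exported from a plugin or server config.
REDIRECTS = {
    "/old-post": "/blog/old-post",
    "/blog/old-post": "/blog/old-post/",
}
# Hypothetical internal link targets found in your content.
LINKS = ["/old-post", "/blog/old-post", "/contact/"]

for link in LINKS:
    final, hops = resolve_redirects(REDIRECTS, link)
    if hops:
        print(f"{link}: {hops} hop(s), link directly to {final}")
```

Anything reported with one or more hops is a link you can point straight at its final destination.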

Why this matters commercially

- For small businesses – if your new landing page doesn’t index for three weeks, that’s leads you’ve missed.

- For SMEs – if content isn’t indexed in step with your publishing schedule, your campaigns lose momentum.

- For corporates – when new features or press releases don’t show up in Google promptly, it becomes a reputational risk with stakeholders.

The customer view vs Google’s view

Site Speed

- Measured by Core Web Vitals (LCP, INP, CLS)

- Reflects how fast people see and interact with pages

- Test with PageSpeed Insights, GTmetrix, Lighthouse

- Optimised via caching, image compression, and good web design

Crawl Speed

- Measured in Search Console Crawl stats

- Reflects how quickly Googlebot gets server responses

- Impacted by hosting, caching for bots, crawl waste, errors

- Critical for fast indexing of content marketing and blogs

The crawl speed questions I get asked most

My PageSpeed scores are green. Why are posts still not indexed?

Because user experience and crawl efficiency are two separate systems. Your visitors see cached pages; Googlebot might not.

What’s a healthy crawl response time?

Consistently under a second. If Search Console shows two to three seconds, Google slows down to avoid stressing your server.

How do I check if Googlebot is served cached pages?

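Request the page with a Googlebot user agent and inspect the response headers. Look for a cache-hit header (the name varies by host and plugin, for example X-Cache or CF-Cache-Status). If it’s missing or reports a miss, bots are probably seeing uncached content.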
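As a rough way to run that check yourself, the sketch below fetches a page with two different user agents and classifies the cache headers. The header names are ones I commonly see and are assumptions about your stack, so adjust them to whatever your host or plugin emits. A request from your own machine with a Googlebot user agent is not treated identically to real Googlebot (some security tools block spoofed bots), so use it as a hint, not proof.

```python
import urllib.request

GOOGLEBOT_UA = ("Mozilla/5.0 (compatible; Googlebot/2.1; "
                "+http://www.google.com/bot.html)")
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Assumed header names; these vary by CDN, host and cache plugin.
CACHE_HEADERS = ("cf-cache-status", "x-cache", "x-litespeed-cache")


def cache_status(headers: dict) -> str:
    """Return 'hit', 'miss' or 'unknown' from a mapping of response headers."""
    lowered = {k.lower(): str(v).lower() for k, v in headers.items()}
    values = [lowered[name] for name in CACHE_HEADERS if name in lowered]
    if any("hit" in value for value in values):
        return "hit"
    return "miss" if values else "unknown"


def fetch_headers(url: str, user_agent: str) -> dict:
    """Fetch a URL with the given user agent and return its response headers."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return dict(response.headers.items())


# Example usage (makes real network requests, so it is left commented out):
# for label, ua in (("browser", BROWSER_UA), ("googlebot", GOOGLEBOT_UA)):
#     print(label, cache_status(fetch_headers("https://example.com/", ua)))
```

If the browser request reports a hit and the Googlebot request reports a miss or unknown, your cache is likely skipping bots.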

Can Wordfence or other security tools hold crawling back on WordPress sites?

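Yes. I’ve seen sites where security plugins throttled or even blocked Googlebot. Always allowlist genuine Googlebot traffic, verified against Google’s published IP ranges or with a reverse DNS check, not just anything that claims to be Googlebot.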
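Google documents a two-step check for this: a reverse DNS lookup on the IP should return a hostname under googlebot.com, google.com or googleusercontent.com, and a forward lookup on that hostname should return the same IP. A minimal sketch of that check, with function names of my own choosing:

```python
import socket

GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_hostname(hostname: str) -> bool:
    """True if the hostname sits under one of Google's crawler domains."""
    return hostname.lower().rstrip(".").endswith(GOOGLE_SUFFIXES)


def verify_googlebot(ip: str) -> bool:
    """Reverse DNS, domain check, then forward DNS back to the same IP."""
    try:
        hostname = socket.gethostbyaddr(ip)[0]
        if not is_google_hostname(hostname):
            return False
        # gethostbyname_ex resolves IPv4 only; IPv6 would need getaddrinfo.
        return ip in socket.gethostbyname_ex(hostname)[2]
    except OSError:
        # Lookup failed, so the address cannot be confirmed as Googlebot.
        return False


# Example usage (performs live DNS lookups, so it is left commented out):
# print(verify_googlebot("66.249.66.1"))
```

Run it against the IPs your security plugin has blocked or rate-limited; any that verify as Googlebot should go on the allowlist.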

Should I block CSS and JS files?

Don’t block them outright. Keep the files essential for rendering open, but stop Google crawling plugin directories that don’t add value.
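Before you publish robots.txt changes, you can test the rules locally. This sketch uses Python’s standard-library parser with a hypothetical rule set and hypothetical paths. One caveat: Python applies the first matching rule, while Google applies the most specific one, so I have listed the Allow line first so that both agree here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep theme assets and content open, block admin
# screens and an internal search directory.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /internal-search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path: str) -> bool:
    """True if Googlebot may fetch the path under the rules above."""
    return parser.can_fetch("Googlebot", "https://example.com" + path)


for path in ("/blog/new-post/",
             "/wp-content/themes/site/style.css",
             "/wp-admin/options.php",
             "/wp-admin/admin-ajax.php",
             "/internal-search/shoes"):
    print(f"{path}: {'allowed' if allowed(path) else 'blocked'}")
```

The point of the check is to confirm that rendering assets such as the theme stylesheet stay open while the low-value directories are blocked.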

How do internal links influence crawl?

Googlebot follows links. A new post linked from a strong SEO service page or your homepage will get picked up faster than one buried in a category archive.

Does hosting play a role?

Absolutely. Even well-optimised sites crawl slowly on underpowered shared hosting. If Crawl stats shows 5xx errors, resources are stretched.

How soon should indexing improve once changes are made?

Often within days for key pages. On newer domains, it can take longer, but you’ll see crawl response times improve first.

Do sitemaps really matter?

Yes, but only if they’re clean. A sitemap full of redirects, noindexed pages, or duplicate URLs tells Google not to trust it. Keep it lean and accurate.
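A quick audit can be scripted once you have the sitemap and a crawl export to compare it against. The sketch below assumes a standard sitemaps.org XML file and a hypothetical dictionary of crawl results (status code and noindex flag per URL), which in practice would come from your crawler of choice.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]


def audit(urls, page_info):
    """Flag duplicate, redirected, non-200 and noindexed sitemap entries."""
    problems, seen = {}, set()
    for url in urls:
        info = page_info.get(url, {})
        if url in seen:
            problems[url] = "duplicate"
        elif info.get("status") in (301, 302, 307, 308):
            problems[url] = "redirect"
        elif info.get("status") != 200:
            problems[url] = "error or not checked"
        elif info.get("noindex"):
            problems[url] = "noindex"
        seen.add(url)
    return problems


# Hypothetical sitemap and crawl results for illustration only.
SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/blog/new-post/</loc></url>
  <url><loc>https://example.com/old-post</loc></url>
  <url><loc>https://example.com/thank-you/</loc></url>
  <url><loc>https://example.com/blog/new-post/</loc></url>
</urlset>
"""
PAGES = {
    "https://example.com/blog/new-post/": {"status": 200, "noindex": False},
    "https://example.com/old-post": {"status": 301, "noindex": False},
    "https://example.com/thank-you/": {"status": 200, "noindex": True},
}

for url, reason in audit(sitemap_urls(SITEMAP), PAGES).items():
    print(f"{url}: {reason}")
```

Every URL the audit flags is one to remove from the sitemap or fix at source.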

Is crawl speed something to monitor continuously?

Yes. For smaller businesses, a quick monthly check is enough. For SMEs and corporates, treat it as a regular SEO KPI, just like rankings or traffic.

Turning speed into visibility

It’s easy to obsess over design tweaks and PageSpeed scores, but that’s not what decides whether Google takes your content seriously. A site can be lightning fast for visitors and still look sluggish to Googlebot.

The businesses I’ve seen win at this don’t just polish their front-end; they focus on how the site behaves under crawl pressure. Make sure crawlers see cached pages, clean up crawl waste, keep error rates near zero, and build internal links that guide Google straight to new content.

Do all that, and indexing stops being unpredictable. Your content won’t sit in “Discovered – currently not indexed” limbo; it will start appearing in search results where it belongs. And for business owners, SMEs, or corporate teams, that’s the difference between a fast site that feels good and a site that actually performs in search.

If you’re tired of fast scores but slow indexing, our SEO Agency can help align your site with how Googlebot really works.